| Type | Personal Specialization Project at The Game Assembly. |

| Engine | My graphics engine built as part of the education at The Game Assembly. |

| Timeframe | 6 weeks (20h/week) from February 2025 |

| Intent | Learn about optimization through compute shaders by pushing the object count in a boid simulation. |

| Github repository. |

Table of Contents

- Background

- Implementation

- The Simulation Program

- Performance Comparisons

- What I've Learned

- Credits & Inspiration

Background

This first section aims to give a brief introduction the the concepts of boids and compute shaders. If you already have a grasp of that, feel free to skip ahead to the implementation.

What are Boids?

The word “boid” is short for “bird-oid object”. In a simulation, every boid moves around in a space while following a set of rules. From this, more complex flock behaviors emerge.

The main rules boids follow are:

- Cohesion: Steer towards the center of the flock.

- Separation: Steer away from nearby boids.

- Alignment: Steer towards the average move direction of the flock.

A flock, in this instance, is whatever is within the boid's visual range, that is how far they see.In short, every boid needs to consider the positions and headings of boids within their visual range.

About Compute Shaders

A compute shader is exactly that: a shader that computes. They run on the GPU, hence a shader, and make use of the large number of parallel processors to do general purpose computations.

Since boid simulations require many of computations, compute shaders are the perfect tool for the job.

Compute shaders are run in thread groups by calling a dispatch function. The parameters of the dispatch call define the number of thread groups, while the compute shader describes the number of threads per group. Every thread in every group executes the instructions of the compute shader. Threads within a group share local memory (which is fast) and can synchronize easiy, while threads in different groups lack direct synchronization and and can only work together on global memory (which is slower). Threads and groups have IDs that map to the total number of threads and groups.

Memory on the GPU needs to be reserved to hold the required data. Blocks of data are copied from the CPU to the GPU using buffers. Buffers are grouped elements of fully typed data, which allows the GPU to interpret them correctly. The buffer data can also be copied back to the CPU.

Without going into further detail about the different kind of buffers, most important to note here is that copying data between the CPU and the GPU is slow and should be avoided when possible.

Implementation

In this section, iterations of the program will be presented chronologically. Performance comparsions between the versions can be found at the bottom of the post.

Version 1: Using the GPU

Version 1 of this program simply makes use of a compute shader for boid computations. Since the GPU both simulates and renders the boids, no data needs to be transferred from the GPU to the CPU. On the GPU, a boid is represented with 32 bytes of data. The buffer boidsIn holds the input data that is used for compututation. The results are stored in boidsOut.

struct Boid {

float3 pos; //Position

uint cellIndex; //Added in Version 2.

float3 vel; //Velocity

uint flockSize; }; //Used for altering the color of the boid when rendered.

RWStructuredBuffer<Boid> boidsIn : register(u0);

RWStructuredBuffer<Boid> boidsOut : register(u1);

cbuffer frameBuffer : register(b0); //Simulation data: number of boids, visual range etc.

The compute shader is dispatched with one thread per boid. Each thread loops over all boids in the simulation to generate an output. The thread ID is used as an index for writing to the boidsOut buffer. Every frame, a std::swap() is performed to swap the data in boidsIn and boidsOut.

Since every boid considers every boid, we're fighting a losing battle against math as we increase the boid count. The number of comparisons needed are the number of boids to the power of two. We can do better.

Version 2: Gridding with Counting Sort

To limit the number of boids compared, the second version empoys a grid for spatial indexing. Every boid end up in a grid cell somewhere in 3D space. The grid cells are cubes with sides with the length of the boid's visual range. This limits the comparisons between boid in the same- and neighboring cells.

In order for the GPU to work efficiently, we want boids in the same grid cell to be stored sequentially in the buffer. Even though the grid exists in 3D, all grid cells get an index ranging from 0 to the number of grid cells minus 1.

To sort the boids, an algorithm called counting sort is employed. The boids of each cell are counted, and the prefix sums (aka cumulated sums) of boids in for each grid index are calculated. This gives us a sum buffer that can be accessed with a grid index to get the indices in the boid buffer.

To visualize this, here is an example of:

- 64 grid cells.

- 128 boids, evenly distributed 2/ cell.

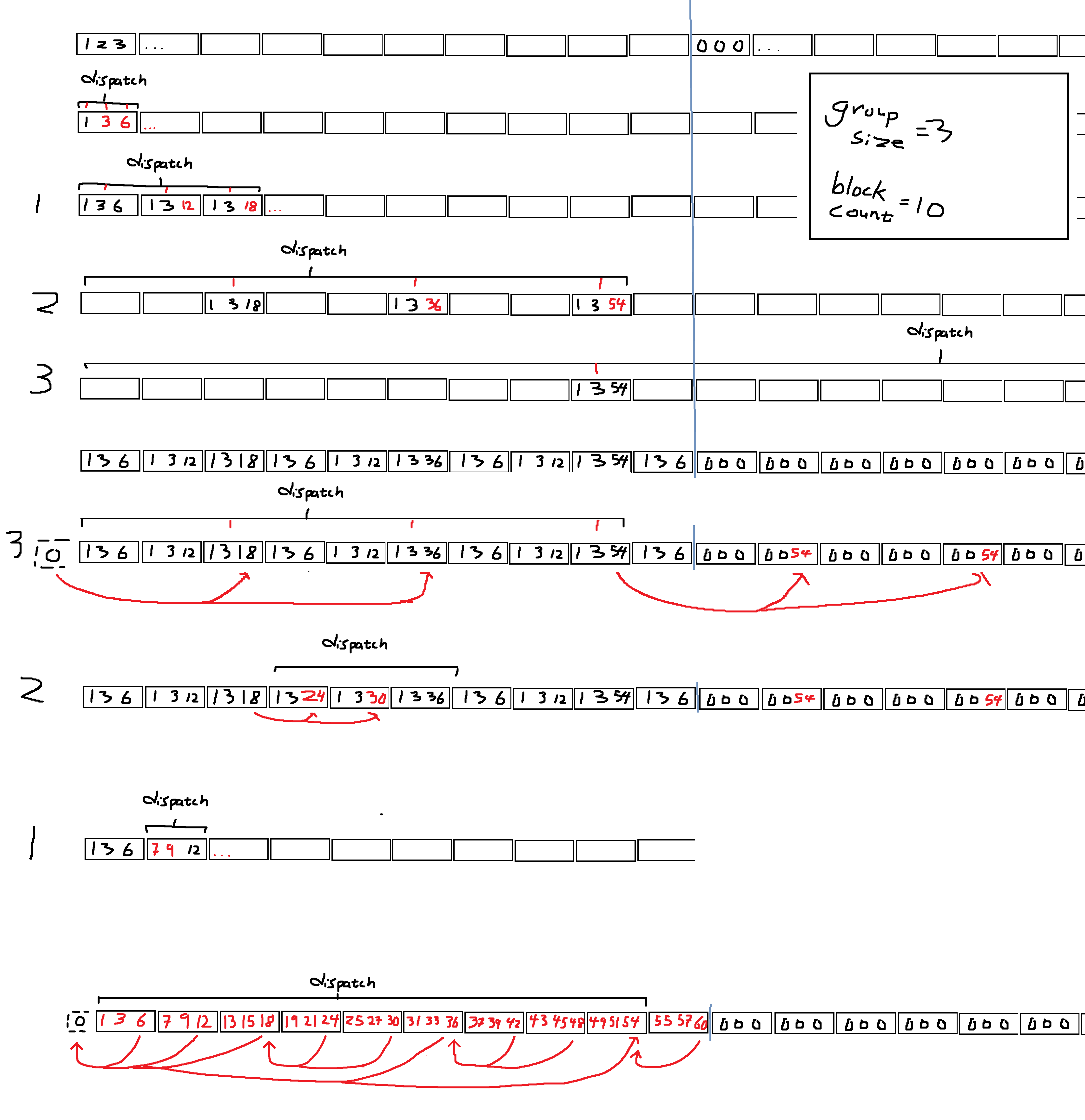

Version 3: Parallel Prefix Sum

While version 2 speeds up boid computations as the number of grid cells increases, it also adds sorting time. As the relationship between the boid's visual range and a side of the grid changes linearly, the number of grid cells changes cubically. Version 2 calculated the sum buffer with one thread, going adding the boid count in cell 0 to cell 1, then cell 1 to cell2 and so on. Even though it&aposs simple addition, the cubic scaling of the number of operations scales horribly.

To tackle this, version 3 uses a parallel prefix sum algorithm. After the boids per grid cell have been counted, thread groups are dispatched to simultaneously calculate the prefix sums of separate blocks of the sum buffer. If needed, we can go several layers deeper, dispatching groups to calculate the perfix sums of the blocks, creating bigger block and calculating the sums of those bigger blocks etc. This part is called sweeping up.

We then sweep down block sums to the blocks, until all prefix sums have been updated.

This is quite hard to visualize with millions of cells and initial block sizes of 512 or 1024, so here is a simplified version with the following parameters:

- 64 grid cells

- 64 boids, evenly distributed 1/ cell.

- Block size 8, 4 threads per thread group

The Simulation Program

The program is built using premake and Visual Studio. While running the program, simulation settings can live updated through an ImGui interface. Settings include boid behavior, grid parameters and graphics. There is a controllable camera and a player boid. The other boids react to the player boid by avoiding or following. For detailed information on the settings, check out the readme.

If you don't have time to check out the program, here are a few examples of playing around with the settings.

Default simulation settings.Live adjusting grid and cohesion.

Using gravity to create “boid water”.

Performance Comparisons

All tests were done on Nvidia GeForce GTX 1080 with an constant even distribution of boids within the grid.

PIX was used to get detailed information on the dispatches of the compute shaders.

The cell counts are noted as x³ to more easily convey the visual range of the boid in comparison to the grid length which is 1 divided by x.

Version 1 - Using the GPU

| Boid Count | Boid Computations (ms/frame) | ||

|---|---|---|---|

| 10,000 | 2.7 | ||

| 50,000 | 47.1 | ||

| 100,000 | 202.5 |

Version 2 - Gridding with Counting Sort

| Boid Count | Cell Count | Boids/ Cell | Sorting (ms/frame) | Boid Computations (ms/frame) | Total (ms/frame) |

|---|---|---|---|---|---|

| 100,000 | 10³ | 100 | 0.19 | 6.0 | 6.19 |

| 100,000 | 20³ | 12.5 | 1.2 | 0.98 | 2.0 |

| 100,000 | 50³ | 4 | 18.7 | 0.29 | 18.99 |

| 1,000,000 | 20³ | 125 | 1.56 | 69.3 | 70.86 |

| 1,000,000 | 50³ | 40 | 19.55 | 7.1 | 26.65 |

| 1,000,000 | 100³ | 1 | 155.3 | 3.0 | 158.3 |

VERSION 3 - Parallel Prefix Sum

| Boid Count | Cell Count | Boids/ Cell | Sorting (ms/frame) | Boid Computations (ms/frame) | Total (ms/frame) |

|---|---|---|---|---|---|

| 1,000,000 | 20³ | 125 | 0.33 | 69.4 | 69.73 |

| 1,000,000 | 50³ | 40 | 0.53 | 6.45 | 6,98 |

| 1,000,000 | 100³ | 1 | 0.67 | 2.76 | 3,43 |

| 1,000,000 | 400³ | 0.016 | 11.8 | 4.73 | 16,53 |

| 5,000,000 | 100³ | 5 | 2.65 | 28.41 | 31,06 |

| 5,000,000 | 400³ | 0.08 | 14.9 | 7.78 | 22,68 |

| 10,000,000 | 100³ | 10 | 5.35 | 79.9 | 85,25 |

| 10,000,000 | 400³ | 0.16 | 18.25 | 15.83 | 34,08 |

What I've Learned

I went into this project wanting to understand what compute shaders are and how they can be used. To start, I didn't understand the hardware, and tried to program like on the CPU. I was overly focused on reducing the number of computations and optimized for every thread to do minimal work. After understanding the GPU better, I could see that the number of computations isn't always the problem. I started looking into preparing the buffers for better data locality, which in trun led me to the counting sort algorithm.

My biggest struggle during this project was working out the parallel prefix sum. I had great resources to draw inspiration from, but found the ideas difficult to implement. I ran into issues where boids disappeared or the frame-rate would drop massively, without understanding why. After some time I realized that in order to move forward, I needed to take a step back.

Since I had a hard time debugging the GPU, I set up a test environment with a thread pool on the CPU. Doing this, I could verify functionality while gaining a deeper understanding of the execution. Once I felt confident in the building blocks, I made some sketches in MS Paint to visualize the flow. This is what I drew that made it click for me, and it was the inspiration for making animations to explain the sorting algorithm to people reading this post:

Credits & Inspiration

Animations made with Manimation Commuity.

Article on parallel prefix sum.

Talk on parallel prefix sum.

Boids project in Unity using compute shaders and prefix sum.